Kafka

What is it commonly used for?

- Stream Processing

- Website Activity Tracking

- Metrics Collection and Monitoring

- Log Aggregation

Publisher/ Consumer Model

- There are 4 main roles in its model:

- Topic

- Publisher

- Consumer

- Broker

- At the high level, it is typical pub/sub model like other messaging systems do. The differences of it are the message volume it can deal with and the speed of it. We will talk about this later.

- Communication between the clients and the servers is done with a simple, high-performance, language agnostic TCP protocol. Java client for Kafka is available and many other languages are available as well.

- a Kafka cluster consists of one or more servers, called Brokers that manage the persistence and replication of message data (i.e. the commit log)

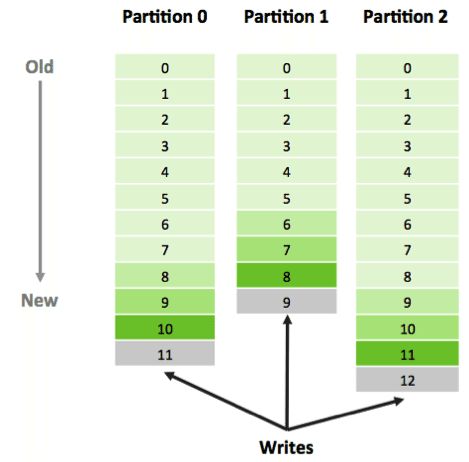

- One of the keys to Kafka’s high performance is the simplicity of the brokers’ responsibilities. In Kafka, topics consist of one or more Partitions that are ordered, immutable sequences of messages. Since writes to a partition are in sequence via file append, this design greatly reduces the number of hard disk seeks (with their resulting latency).

- The messages in the partitions are each assigned a sequential id number called the offset that uniquely identifies each message within the partition.

- The Kafka cluster retains all published messages—whether or not they have been consumed—for a configurable period of time.

- Kafka's performance is effectively constant with respect to data size so retaining lots of data is not a problem.

- The partitions in the log serve several purposes. First, they allow the log to scale beyond a size that will fit on a single server. Each individual partition must fit on the servers that host it, but a topic may have many partitions so it can handle an arbitrary amount of data. Second they act as the unit of parallelism.

- Each partition is replicated across a configurable number of servers for fault tolerance.

- Each partition has one server which acts as the "leader" and zero or more servers which act as "followers". The leader handles all read and write requests for the partition while the followers passively replicate the leader. If the leader fails, one of the followers will automatically become the new leader. Each server acts as a leader for some of its partitions and a follower for others so load is well balanced within the cluster.

- Producers publish data to the topics of their choice. The producer is responsible for choosing which message to assign to which partition within the topic. This can be done in a round-robin fashion simply to balance load or it can be done according to some semantic partition function (say based on some key in the message).

Can we use it as a queue?

- The abstraction of consumer group makes both queue and pub/sub model possible.

- Consumers label themselves with a consumer group name, and each message published to a topic is delivered to ONE consumer instance within each subscribing consumer group. Consumer instances can be in separate processes or on separate machines.

- If all consumer instances have the same consumer group, this works just like a traditional queue balancing load over the consumers.

- If all consumer instances have different consumer groups, then this works like publish-subscribe and all messages are broadcast to all consumers.

- For Kafka consumers, keeping track of which messages have been consumed (processed) is simply a matter of keeping track of an Offset, which is a sequential id number that uniquely identifies a message within a partition.

A queue can be consumed in parallel

- How to guarantee the order of delivery to the consumer instances in the consumer group if they work in parallel and the messages are sent in asynchronous fashion? Traditionally, we need to do that thru "exclusive consumer" that is single consumer instance. So, we sacrify the parallellism in exchange of orderness.

- Kafka can do a bit better as you can assign partition of a topic to single consumer instance and have all messages of a key going to a partition. That is to say, if you can separate messages by key and order across the keys doesn't matter, you can achieve parallelism across the keys and message orderness per key.

What guarantee Kafka provides?

At a high-level Kafka gives the following guarantees:

- Messages sent by a producer to a particular topic partition will be appended in the order they are sent.

- A consumer instance sees messages in the order they are stored in the log.

- For a topic with replication factor N, we will tolerate up to N-1 server failures without losing any messages committed to the log.

Why is it so fast?

Append-only write.

- The performance of linear writes on a JBOD configuration with six 7200rpm SATA RAID-5 array is about 600MB/sec but

- The performance of random writes is only about 100k/sec - a difference of over 6000X !!

- These linear reads and writes are the most predictable of all usage patterns, and are heavily optimized by the operating system.

- A modern operating system provides read-ahead and write-behind techniques that prefetch data in large block multiples and group smaller logical writes into large physical writes.

- A further discussion of this issue can be found in this ACM Queue article; they actually find that sequential disk access can in some cases be faster than random memory access!

- To compensate for this performance divergence modern operating systems have become increasingly aggressive in their use of main memory for disk caching. A modern OS will happily divert all free memory to disk caching with little performance penalty when the memory is reclaimed.

- All disk reads and writes will go through this unified cache. This feature cannot easily be turned off without using direct I/O, so even if a process maintains an in-process cache of the data, this data will likely be duplicated in OS pagecache, effectively storing everything twice.

- Since Kafka is built on top of JVM. We know that the memory overhead of objects is garbage collection becomes increasingly fiddly and slow as the in-heap data increases.

- Therefore, using the filesystem and relying on pagecache is superior to maintaining an in-memory cache or other structure. At least we double the available cache by having automatic access to all free memory, and likely double again by storing a compact byte structure rather than individual objects. Doing so will result in a cache of up to 28-30GB on a 32GB machine without GC penalties. Furthermore this cache will stay warm even if the service is restarted, whereas the in-process cache will need to be rebuilt in memory (which for a 10GB cache may take 10 minutes) or else it will need to start with a completely cold cache (which likely means terrible initial performance). This also greatly simplifies the code as all logic for maintaining coherency between the cache and filesystem is now in the OS, which tends to do so more efficiently and more correctly than one-off in-process attempts. If your disk usage favors linear reads then read-ahead is effectively pre-populating this cache with useful data on each disk read.

- This style of pagecache-centric design is described in an article on the design of Varnish here (along with a healthy dose of arrogance).

- Batch operations

- Zero-copying via sendfile

- Tackle network bandwidth limit via batch compression. Kafka supports GZIP and Snappy compression protocols

Broker is not responsible for keeping track of what messages have been consumed – that responsibility falls on the consumer. In contrast, in traditional messaging systems such as JMS, the broker bore this responsibility, severely limiting the system’s ability to scale as the number of consumers increased.

Reference

- https://engineering.linkedin.com/distributed-systems/log-what-every-software-engineer-should-know-about-real-time-datas-unifying

- http://www.confluent.io/blog/compression-in-apache-kafka-is-now-34-percent-faster

- http://www.confluent.io/blog/apache-kafka-samza-and-the-unix-philosophy-of-distributed-data